Artificial intelligence (AI) has been a hot topic in the business world for years, and visionaries never tire of painting the future in the most dazzling colours. Bill Gates recently predicted that AI will significantly change healthcare within five years, fresh fodder for the imagination and for AI adoption.

Early attempts at artificial intelligence and machine learning (ML) solutions were not always convincing. That changed in one fell swoop at the end of 2022 with OpenAI’s ChatGPT (initially based on GPT-3.5, later also on GPT-4): a freely available AI that delivers convincing results. The dreaming started again, and generative AI (GenAI) was suddenly on everyone’s lips. Today, over 100 million people use ChatGPT regularly and are enthusiastic about its possibilities for content creation. The chatbot goes beyond a typical Internet search: it can compile and condense information, write code, and hold entertaining conversations.

Generative AI is a subfield of artificial intelligence. Its name indicates its original purpose: generating new content such as text, images, and videos, based on the patterns and data it was previously trained on. The aim is to mimic the patterns and styles found in its training data, allowing it to both replicate and innovate across various formats. In essence, GenAI bridges the gap between data input and creative output, enabling AI not just to analyse but also to create.
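The principle of generating new content by mimicking patterns in training data can be illustrated with a deliberately tiny toy. The sketch below is a hypothetical example, not how production models work: a word-level bigram model that records which word followed which in a small made-up corpus and then samples new sequences from those observations. Real GenAI models such as GPT-4 use large neural networks rather than lookup tables, so this only conveys the idea. The function names `train_bigrams` and `generate` are illustrative.

```python
import random
from collections import defaultdict


def train_bigrams(corpus: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    words = corpus.split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Sample a word sequence that only uses transitions seen during training."""
    rng = random.Random(seed)  # fixed seed makes the output reproducible
    output = [start]
    for _ in range(length - 1):
        candidates = model.get(output[-1])
        if not candidates:  # no known continuation for this word: stop early
            break
        output.append(rng.choice(candidates))
    return output


# Hypothetical toy "training data"
corpus = "the model reads the data and the model writes new text from the data"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 8)))
```

Every adjacent word pair in the output also occurs in the training text, yet the sequence as a whole can be new: a miniature version of "replicate and innovate".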

What if companies had such powerful digital assistants? What if employees could offload tedious tasks such as searching the intranet, taking project minutes, and preparing presentations? What would that mean for productivity in the company?

Back in 2019, Bloomberg reported that it generates 30 percent of its journalistic content using artificial intelligence. In early April 2023, the company caused a stir with BloombergGPT, its own large language model. Bloomberg’s AI teams developed it with PyTorch, a Python-based deep learning framework, and trained it on financial market data collected over more than 40 years of corporate history: the company’s crown jewels. This yielded a total of 363 billion so-called tokens for training the model.

The Bloomberg team trained its foundation model (FM) on Amazon Web Services (AWS), renting 64 servers, each with eight NVIDIA A100 GPUs. Training took 53 days (!). The result is an FM tailored to the financial market: a completely new type of financial research and analysis tool that can be developed further continuously and that is revolutionising Bloomberg’s offerings.
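To put the scale of this training run into numbers, here is a quick back-of-the-envelope calculation using only the figures above. It assumes, as a simplification, that all GPUs ran around the clock for the full 53 days; actual utilisation is not stated here.

```python
# Figures from the Bloomberg example above
servers = 64
gpus_per_server = 8
training_days = 53

total_gpus = servers * gpus_per_server       # GPUs working in parallel
gpu_hours = total_gpus * training_days * 24  # assumes 24/7 utilisation

print(f"{total_gpus} GPUs, {gpu_hours:,} GPU-hours")
```

This prints `512 GPUs, 651,264 GPU-hours`, which makes clear why few companies would want to repeat such a run for every use case.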

From another perspective, the Bloomberg example clearly shows how much effort it takes to build artificial intelligence. Companies need not only expertise but also the right data and extensive computing capacity for training. From a technical and business perspective, the cloud is the best choice for this. FMs are at the heart of AI development: most of the money and time goes into machine learning, that is, developing and training these base models, such as large language models. The fine-tuning and inference work required afterwards is relatively small in comparison.

This is changing with the availability of pre-trained FMs such as large language models. Companies that want to develop AI for their specific application scenarios no longer need to take the time-consuming route of reinventing the wheel. Instead, they can take existing FMs and adapt them to their specific tasks. Cloud platforms such as AWS offer all the AI tools needed for training and development (Fig. 1), which massively accelerates the delivery of AI applications. In addition to purpose-built infrastructure and frameworks, AWS also offers access to various FMs. A particular strength of working with pre-trained FMs from the cloud is how easily a proof of concept can be rolled out to a production environment.

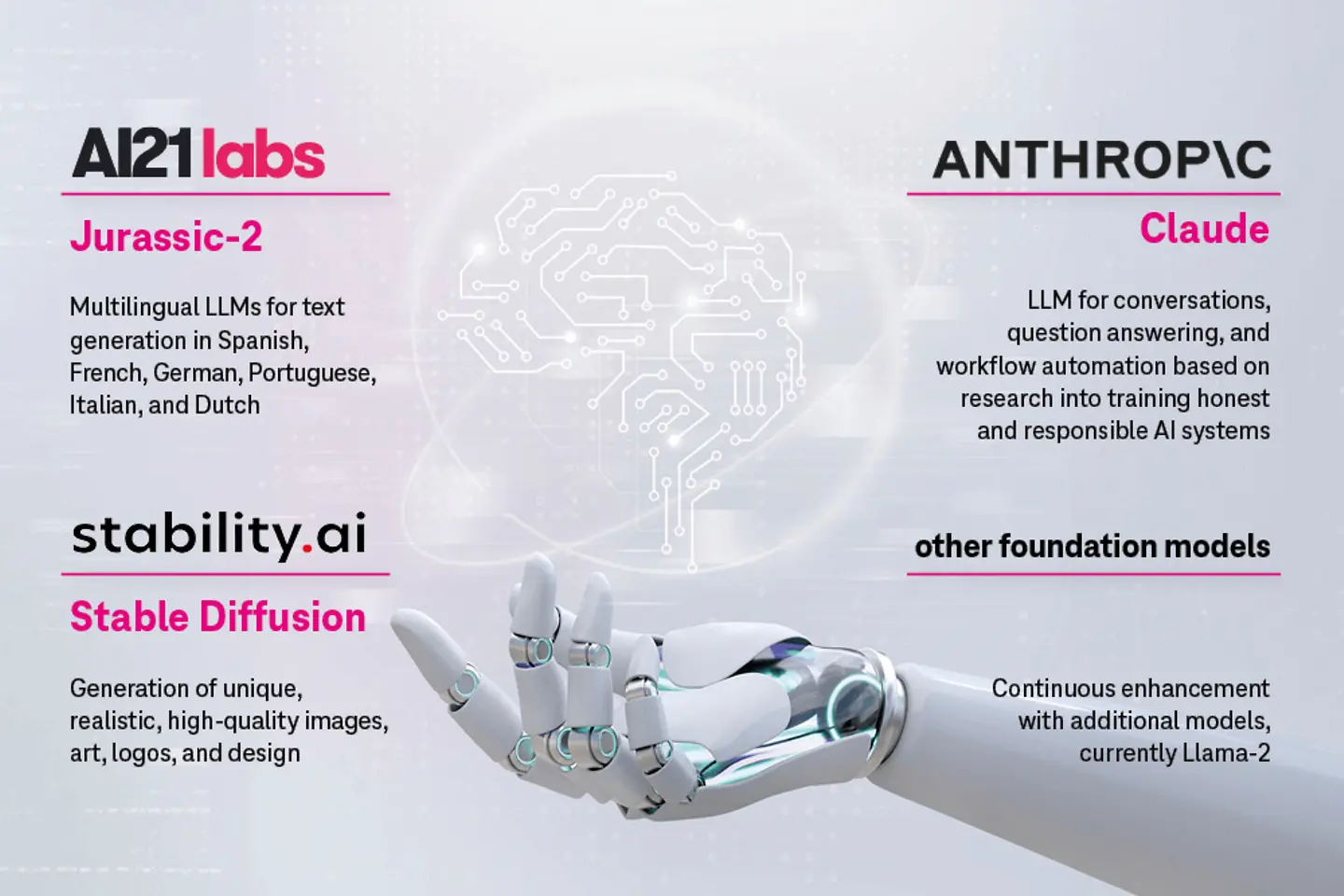

These FMs include Amazon’s in-house Titan Text and Titan Embeddings, as well as Jurassic-2, Claude, and Stable Diffusion, published by the well-known AI start-ups AI21 Labs, Anthropic, and Stability AI respectively. Meta’s Llama-2 is also available. Amazon Bedrock gives users access to these models through an API, so there is no need to set up dedicated infrastructure, and companies can develop their own GenAI solutions very quickly. Thanks to the wide selection, they can choose the foundation model that best suits the purpose of the generative AI application they want to build.
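As a rough illustration of what "access via an API" can look like, here is a minimal sketch that sends a prompt to a Titan Text model through the Bedrock runtime API using the AWS SDK for Python (boto3). Several details are assumptions to verify against the current Bedrock documentation: the model ID `amazon.titan-text-express-v1`, the region `us-east-1`, and the Titan request/response JSON fields (`inputText`, `textGenerationConfig`, `results`, `outputText`). The helper names `build_titan_request` and `ask_titan` are hypothetical. Calling the model requires boto3, configured AWS credentials, and model access enabled in the Bedrock console.

```python
import json


def build_titan_request(prompt: str, max_tokens: int = 512, temperature: float = 0.5) -> str:
    """Build a JSON request body in the (assumed) Titan Text format."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": 0.9,
        },
    })


def ask_titan(prompt: str, region: str = "us-east-1") -> str:
    """Send a prompt to a Titan Text model via the Bedrock runtime API."""
    import boto3  # imported lazily so the payload helper works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId="amazon.titan-text-express-v1",  # assumed model ID; check availability in your region
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing the model's JSON answer
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]
```

With credentials and model access in place, a call such as `ask_titan("Summarise our Q3 project minutes in three bullet points.")` returns the generated text. Swapping in another FM mainly means changing the model ID and the request body format, since each model family on Bedrock expects its own JSON schema.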

AI and GenAI are currently developing at a breathtaking pace, and the quality of the available AI services is rising along with them. Companies that ignore AI, especially against the backdrop of the looming shortage of skilled workers, will lose competitiveness in the coming years. It is important to develop a strategy for using AI and to identify potential use cases within the company. Beyond these forward-looking steps, companies should above all gain hands-on experience with AI, both as users and as developers.

Only in a few cases will it be useful and necessary to develop your own FMs from scratch. The availability of many of the required building blocks significantly reduces development costs, and it is now more important to involve process experts in AI development. Data, however, remains essential: specific AI systems intended to support company processes will need to be trained or fine-tuned with the company’s own data. This data represents the company’s intellectual property and must not leak during training under any circumstances, which makes robust security concepts essential.

With Pathfinder, a generative AI proof of concept, T-Systems offers customers an easy introduction to generative AI development on AWS. Over a period of two weeks, teams can explore the capabilities of GenAI in a secure sandbox environment and chat with their own data sources through pre-trained foundation models such as Llama-2, Titan, and Claude 2. The customer’s internal data remains within the AWS environment, with full access to the complete portfolio of AWS capabilities for AI-powered systems.