Let’s face it: amid the generative artificial intelligence (GenAI) hype, many businesses are grappling with underwhelming outcomes from ambitious initiatives. Enterprises are constantly challenged to cut through the noise and turn AI challenges into competitive advantages. To redefine success in your next enterprise AI project, it’s high time to rethink your strategy.

The explosive rise of GenAI and Large Language Models (LLMs) is unprecedented, with OpenAI’s ChatGPT amassing 100 million users in just months. The AI landscape is evolving rapidly: since the beginning of 2024, OpenAI’s leading position has been challenged by powerful new models such as Anthropic’s Claude 3 and Mistral AI’s Mistral Large, and by new developments such as Large Action Models (LAMs), which focus on generating coherent, goal-directed sequences of actions rather than just text.

As tech giants and startups intensify their AI competition and drive rapid innovation in GenAI and LLMs, the future of this transformative technology remains excitingly unpredictable. Businesses need to keep a close eye on the AI market, separating the buzz from the real deal to spot genuine opportunities that make sense for them.

From boardrooms to C-suites, the question on everyone’s lips continues to be: “What’s our GenAI strategy, how are our initiatives doing, and what is the ROI?”

Europe’s expenditure on AI-centric systems was estimated at $34.2 Bn in 2023 across all industries, with a compound annual growth rate (CAGR) of 29.6% until 2027, according to IDC. Spending on LLMs and GenAI was initially fueled by grassroots enthusiasm and fear of missing out (FOMO) rather than by strategically set objectives, prompting service providers to scramble for market share.

However, immediate benefits from AI assistants, chatbots, and coding tools have proven tangible. Businesses are now tapping into diverse applications, such as:

With billions invested and a global race to secure AI talent, Gartner projects that by 2026, more than 80% of enterprises will have integrated GenAI APIs or models into their production environments, up from less than 5% in 2023. Despite the widespread belief in LLMs as a universal solution for business hurdles, Forbes points out that 90% of GenAI Proof-of-Concepts (POCs) falter before reaching full-scale production.

Rethinking strategies in an ever-changing terrain: There’s a pervasive notion that advanced AI, particularly LLMs, is the silver bullet for all business challenges. The reality is that Generative AI is still maturing. Ambiguous objectives and ill-defined problems make it hard to translate business needs into executable AI tasks and to measure success afterwards. With the AI landscape in constant flux (an ever-expanding suite of new models, orchestration libraries, and cloud services), there’s a risk of becoming ensnared in an endless prototyping loop that erodes the bottom line.

The takeaway: The key lies in rigorously evaluating each use case’s potential against its complexity, followed by swift, strategic experiments. This iterative, discovery-focused approach avoids premature large-scale investment in use cases with unclear requirements or substantial technical hurdles.
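To make the idea of weighing potential against complexity more tangible, here is a minimal sketch of a triage helper. Everything in it is an assumption for illustration: the `UseCase` fields, the 1-to-5 scoring scales, the threshold, the recommendation labels, and the example scores are hypothetical and would need to be replaced by criteria that fit your own organization.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    potential: int   # hypothetical scale: 1 (low) to 5 (high) business value
    complexity: int  # hypothetical scale: 1 (low) to 5 (high) difficulty


def triage(use_case: UseCase, threshold: int = 3) -> str:
    """Map a use case to a next step based on potential vs. complexity."""
    high_potential = use_case.potential >= threshold
    high_complexity = use_case.complexity >= threshold
    if high_potential and not high_complexity:
        return "experiment now"
    if high_potential and high_complexity:
        return "small discovery spike first"
    if not high_potential and not high_complexity:
        return "backlog"
    return "drop"


# Illustrative candidates with made-up scores.
candidates = [
    UseCase("support chatbot", potential=4, complexity=2),
    UseCase("contract analysis", potential=5, complexity=4),
    UseCase("meeting summaries", potential=2, complexity=1),
    UseCase("fully autonomous agent", potential=2, complexity=5),
]

for uc in candidates:
    print(f"{uc.name}: {triage(uc)}")
```

The point of such a helper is not the exact scores but the discipline: every candidate gets an explicit potential and complexity rating before any budget is committed.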

Unreliable data, privacy & security concerns: Data quality and security stand at the forefront of the AI-driven enterprise, because AI systems falter without robust, unbiased, and voluminous data. While enterprises, especially in tightly regulated industries, have access to an abundance of text-rich resources, the challenges of data reliability, privacy, and security remain acute. Unauthorized intrusions, data breaches, and cyber-attacks pose severe threats to the integrity and confidentiality of GenAI systems. Ethical and legal issues, such as copyright infringement, intellectual property disputes, and biases ingrained in training data, have already spurred legal battles, underscoring the imperative for conscientious AI development and deployment.

The takeaway: High-quality, unbiased data is fundamental to using LLMs effectively, reliably, and ethically. Companies can access scalable LLM solutions through pay-as-you-go hosting or opt for tighter control with dedicated private infrastructure. Alongside this, rigorous data governance and cybersecurity, including regular audits and legal compliance checks, provide a secure framework to meet privacy and security needs in AI deployment.

The cost & complexity conundrum: Implementing GenAI isn’t just about the initial investment; it’s a commitment to ongoing costs. Cloud bills for running large models are high, building your own models requires expensive experts and hardware, and even open-source options demand technical know-how. Guiding GenAI output, system upgrades, specialized talent, data security, ethics, compliance, monitoring, training, and legal considerations all add to the expense. In many cases, upfront investments and running costs exceed any cost savings or revenue gains, so projects are stopped before the actual roll-out. In addition, many enterprise use cases require a high degree of customization, and the complex interplay of LLM orchestration, data integration, retrieval-augmented generation (RAG), embedding models, and vector databases poses a substantial obstacle. Furthermore, the pursuit of swift results can lead teams to deprioritize vital non-functional aspects, such as RAG pipeline scalability, robust model performance, security, and stringent data protection, all of which creates technical debt that must be addressed later.

The takeaway: Successfully navigating the cost and complexity of implementing GenAI entails conducting detailed analyses to weigh initial investments against long-term benefits. For instance, a retail company investing in AI-driven customer service bots must consider the costs of cloud services, expert personnel, and ongoing maintenance against potential revenue gains from improved customer satisfaction and retention. Collaboration between IT, marketing, and customer service departments ensures alignment of goals and efficient resource allocation. Prioritizing non-functional aspects, such as scalability and security, can be illustrated by a fintech firm implementing AI-based fraud detection systems, where robustness and data protection are paramount. Continuous improvement involves regular evaluations of performance metrics and user feedback, enabling timely adjustments to optimize efficiency and effectiveness.
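As a rough illustration of weighing initial investments against long-term benefits, the sketch below computes a simple payback period and net benefit. The function names and all figures are placeholders invented for this example, not numbers from any real project, and the model deliberately ignores discounting, ramp-up time, and cost growth.

```python
import math
from typing import Optional


def net_benefit(upfront: int, monthly_cost: int, monthly_gain: int, months: int) -> int:
    """Cumulative gain minus running and upfront costs over the horizon."""
    return (monthly_gain - monthly_cost) * months - upfront


def payback_months(upfront: int, monthly_cost: int, monthly_gain: int) -> Optional[int]:
    """Months until the upfront investment is recovered, or None if never."""
    monthly_net = monthly_gain - monthly_cost
    if monthly_net <= 0:
        return None  # running costs eat up all gains
    return math.ceil(upfront / monthly_net)


# Placeholder figures for a hypothetical customer service bot.
upfront = 120_000      # build and integration
monthly_cost = 8_000   # cloud, maintenance, personnel share
monthly_gain = 18_000  # estimated savings plus added revenue

print(payback_months(upfront, monthly_cost, monthly_gain))   # 12
print(net_benefit(upfront, monthly_cost, monthly_gain, 24))  # 120000
```

Even a back-of-the-envelope model like this makes it visible when running costs would swallow the expected gains, which is exactly the situation in which initiatives tend to be stopped before roll-out.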

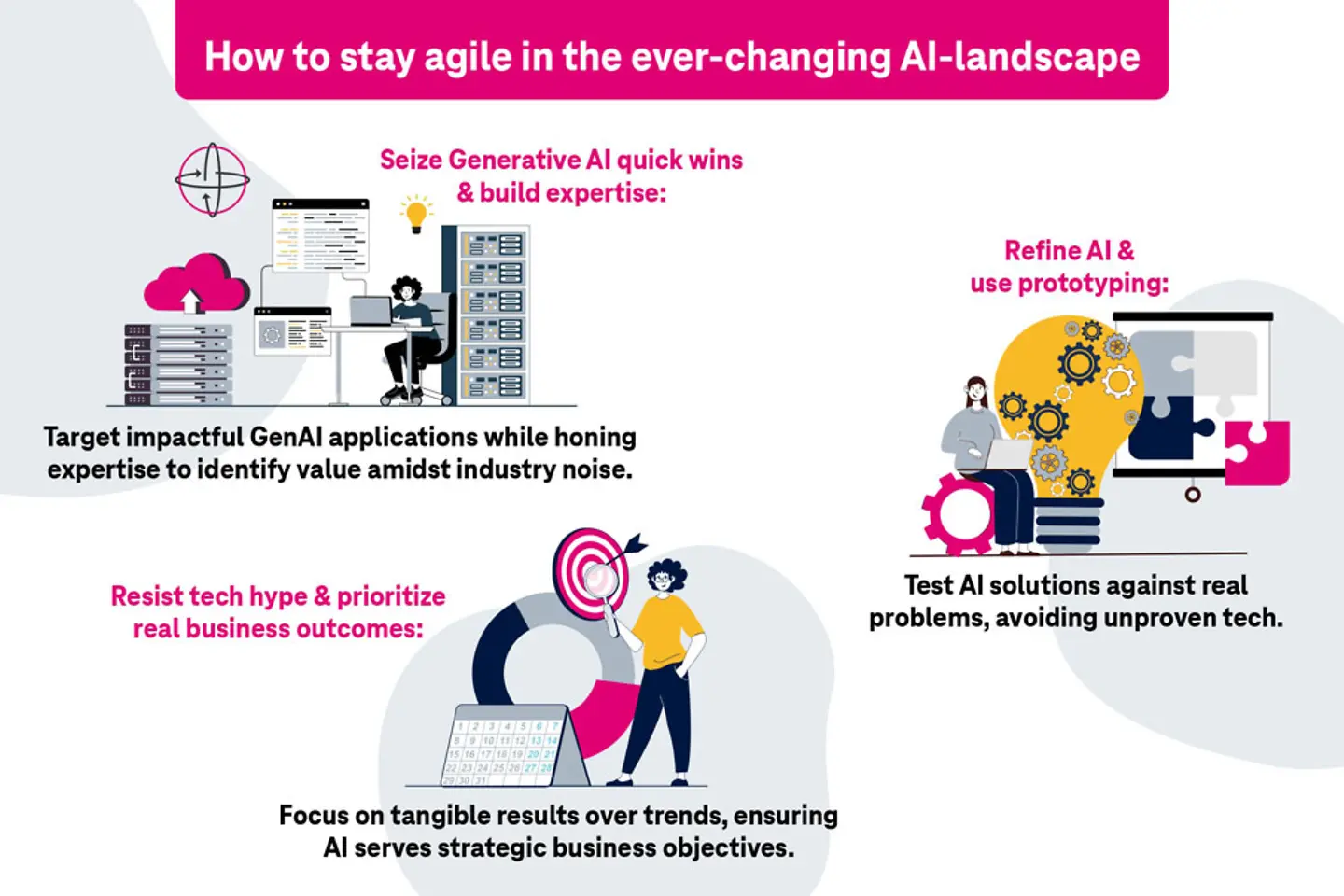

On balance, we believe that businesses should stay current with technological developments while keeping their AI strategies tightly aligned with concrete business objectives. Some good rules of thumb to consider:

As enterprises continue their journey through the AI landscape, it’s essential to maintain a dual focus: embracing continuous tech evolution while steadfastly pursuing tangible business outcomes.

The bottom line: The strategic integration of AI should not add to the technological noise but rather, through a synergy of innovation and governance, translate into measurable business success.

The journey through the intricate maze of AI adoption is fraught with challenges, but it is also ripe with opportunity. If you would like to know more about how to transform these challenges into launching pads for innovation and growth, feel free to contact us for a conversation. We look forward to hearing your questions!